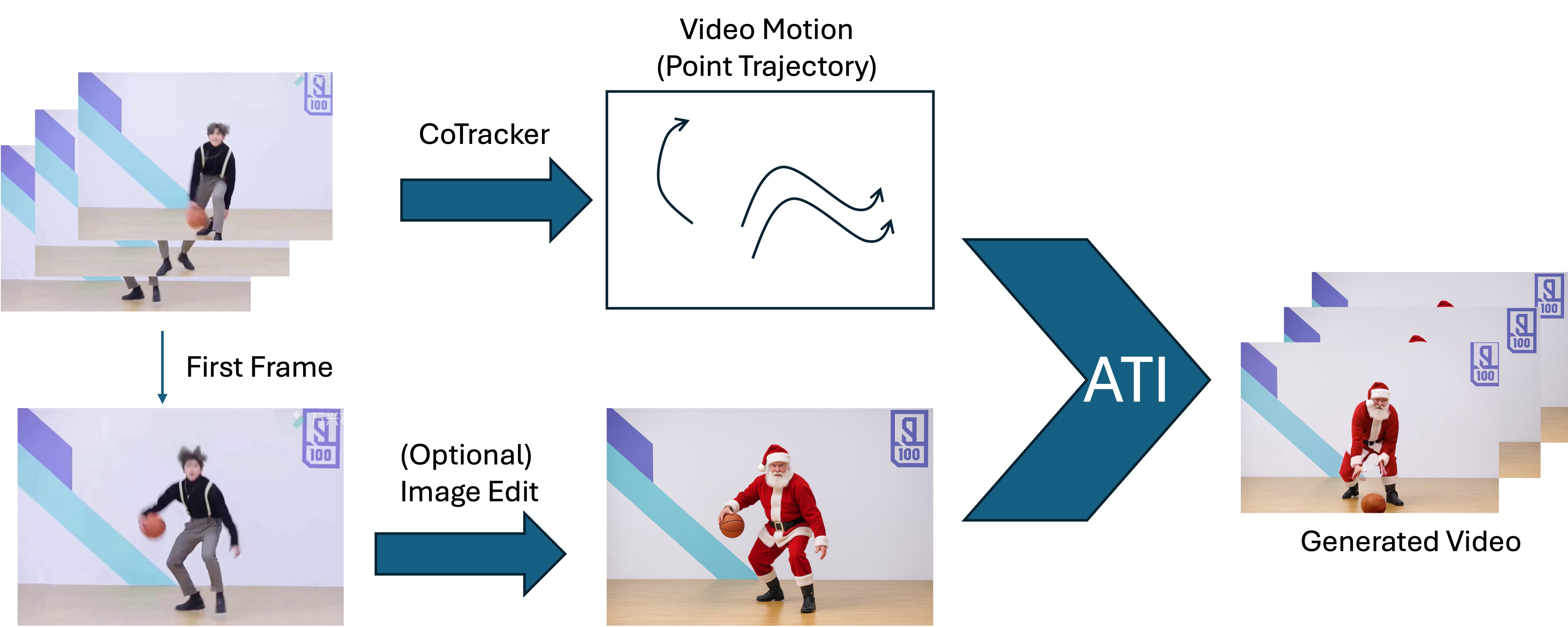

We present a trajectory-based motion control framework that unifies object, local and camera movements in video generation. By embedding user-defined keypoint paths into pretrained image-to-video models via a lightweight motion injector, our approach produces temporally coherent, semantically aligned motion. It excels across tasks—motion brushes, dynamic viewpoints and precise local deformations—offering superior controllability and visual quality compared to prior and commercial methods, while remaining compatible with diverse state-of-the-art backbones.

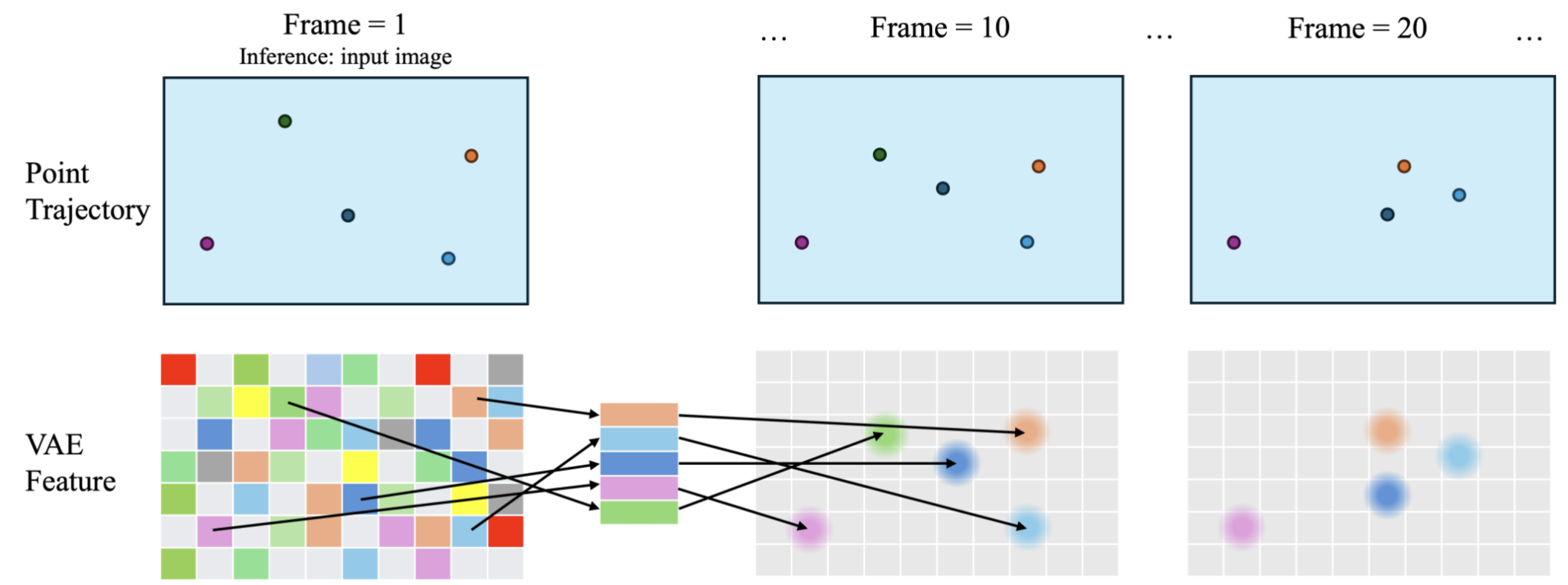

ATI enables fine-grained feature-level Instruction of Trajectories. Specifically, ATI introduces a Gaussian-based motion injector to encode trajectory signals, spanning local, object-level, and camera motion, directly into the latent space of a pretrained image-to-video diffusion model. This enables unified and continuous control over both object and camera dynamics.

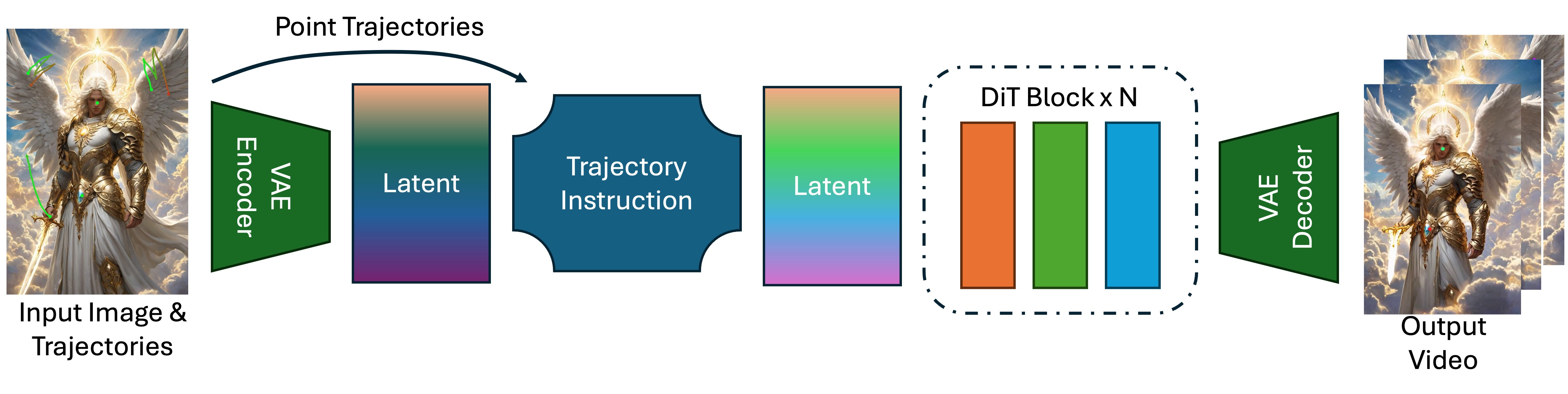

ATI generates videos from user-specified trajectories, enabling flexible object motion and camera control. It takes an image and a set of point-wise trajectories as input. The trajectories are injected into the latent condition used for generation, and the output video is decoded from the latent denoised by the DiT.

The Trajectory Instruction module computes a latent feature from a point’s trajectory. During inference, given the point’s location in the first frame (i.e., the input image), we sample the feature at that location using bilinear interpolation. We then compute a spatial Gaussian distribution for each visible point, centered at its corresponding location in every subsequent frame.
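The two steps described above (bilinear sampling at the first-frame location, then a spatial Gaussian at each later location) can be illustrated with a minimal sketch. This is not ATI's implementation: the function names, the nested-list feature layout (`H x W x C`), the `sigma` value, and the choice to write the Gaussian-weighted feature into an otherwise empty per-frame map are all assumptions made for illustration.

```python
import math

def bilinear_sample(feat, x, y):
    """Sample a C-dim feature at continuous (x, y) from an H x W x C nested list."""
    h, w = len(feat), len(feat[0])
    # Clamp so the four neighbours stay inside the grid.
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    ax, ay = x - x0, y - y0
    return [
        (1 - ay) * ((1 - ax) * feat[y0][x0][c] + ax * feat[y0][x1][c])
        + ay * ((1 - ax) * feat[y1][x0][c] + ax * feat[y1][x1][c])
        for c in range(len(feat[0][0]))
    ]

def gaussian_map(h, w, cx, cy, sigma=1.0):
    """Unnormalised spatial Gaussian centred at (cx, cy) on an H x W grid."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma ** 2))
         for x in range(w)]
        for y in range(h)
    ]

def trajectory_condition(first_frame_feat, track, visible, sigma=1.0):
    """Build one H x W x C map per subsequent frame for a single point.

    track: list of (x, y), one per frame; visible: list of bool, one per frame.
    Assumption: each map is the Gaussian weight times the first-frame feature,
    and frames where the point is not visible get an all-zero map.
    """
    h, w = len(first_frame_feat), len(first_frame_feat[0])
    c = len(first_frame_feat[0][0])
    point_feat = bilinear_sample(first_frame_feat, *track[0])
    frames = []
    for (x, y), vis in zip(track[1:], visible[1:]):
        if not vis:
            frames.append([[[0.0] * c for _ in range(w)] for _ in range(h)])
            continue
        g = gaussian_map(h, w, x, y, sigma)
        frames.append(
            [[[g[j][i] * v for v in point_feat] for i in range(w)]
             for j in range(h)]
        )
    return frames
```

In a real model the feature map would be a tensor in the latent space and the per-point maps would be combined across all trajectories before being passed to the diffusion backbone; how that combination is done is not specified here.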

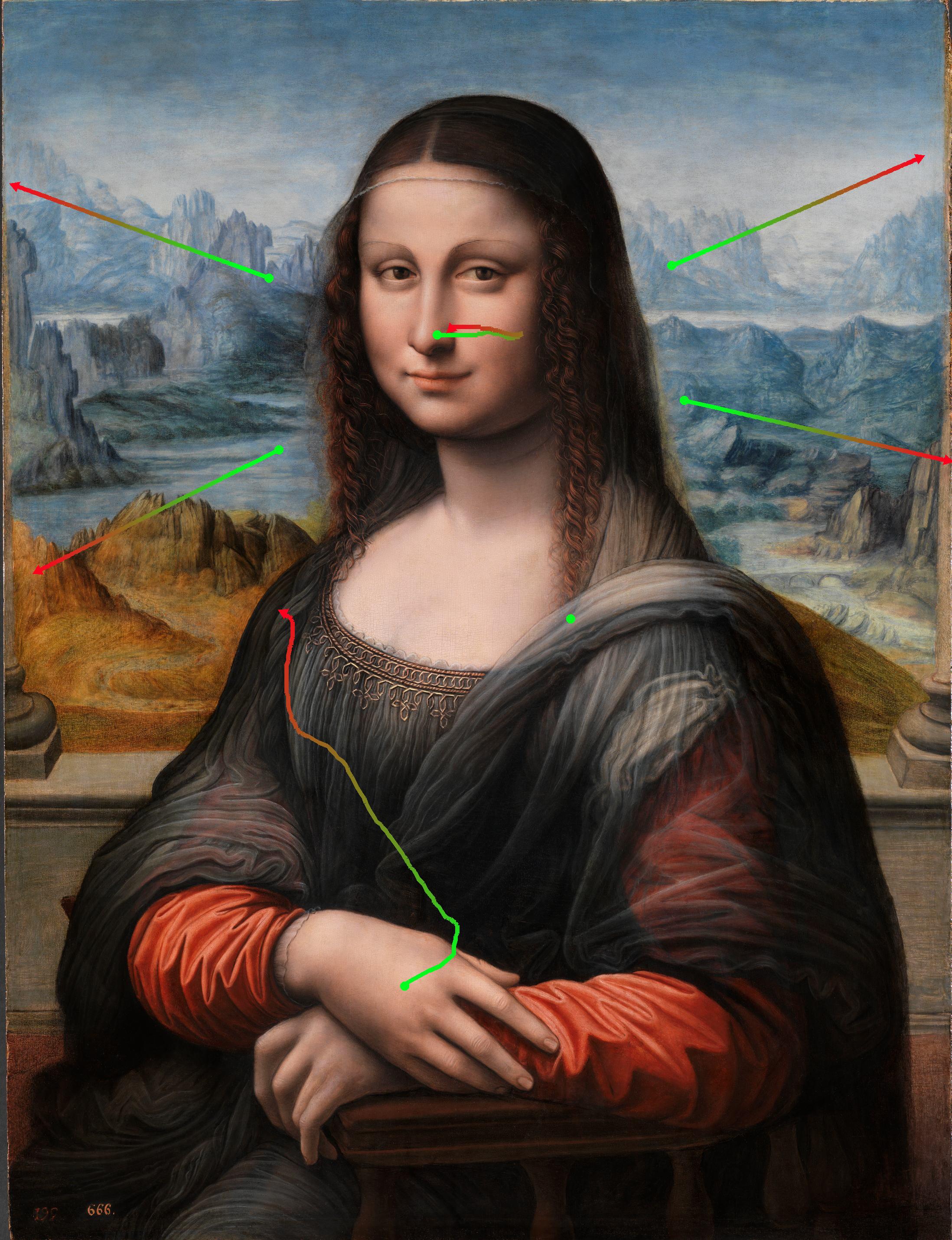

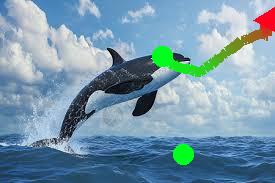

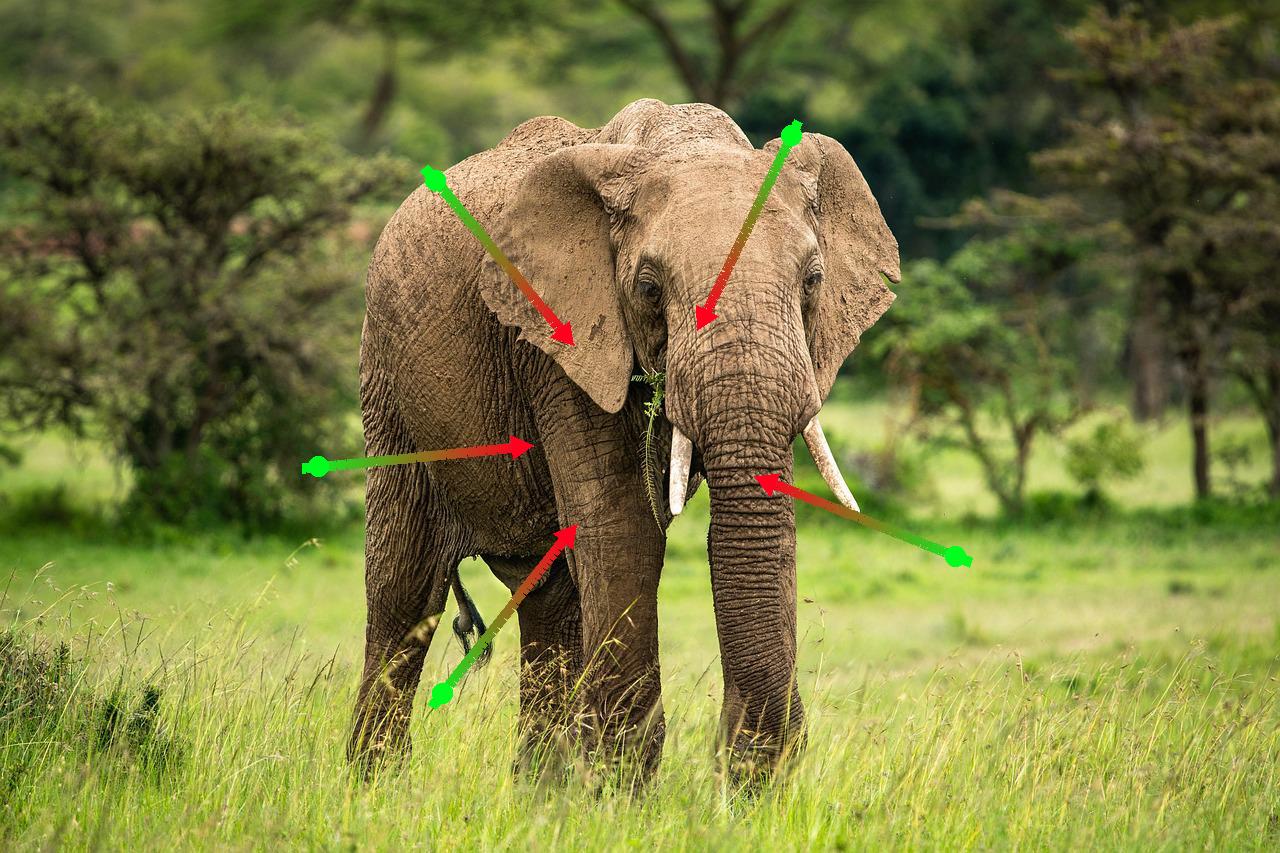

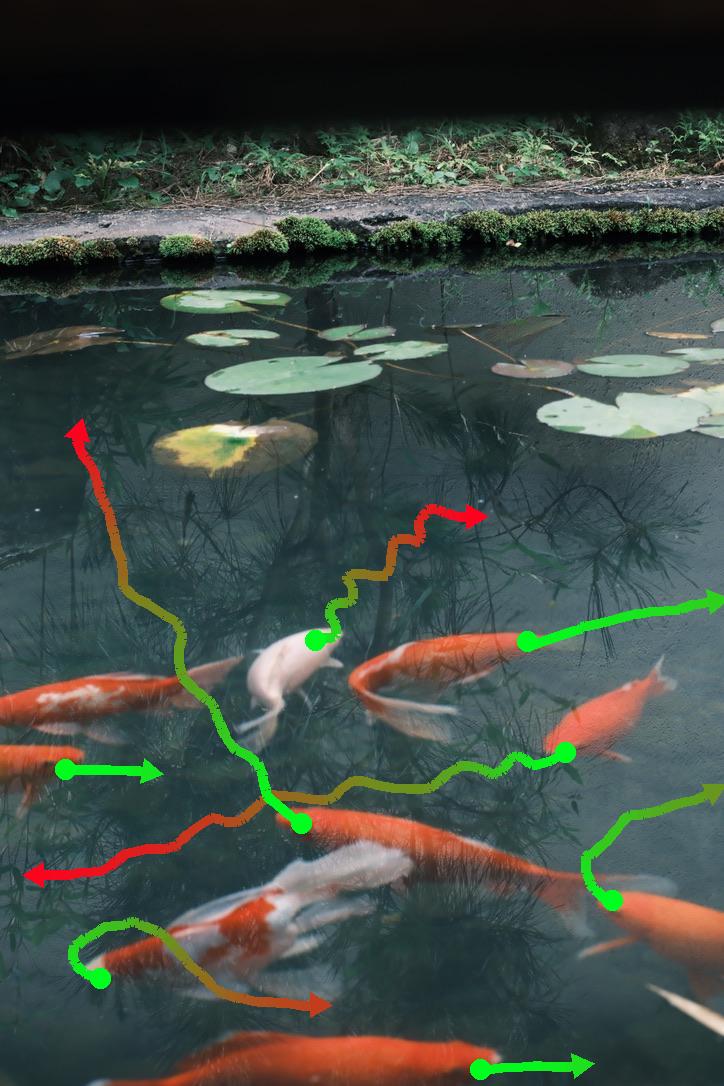

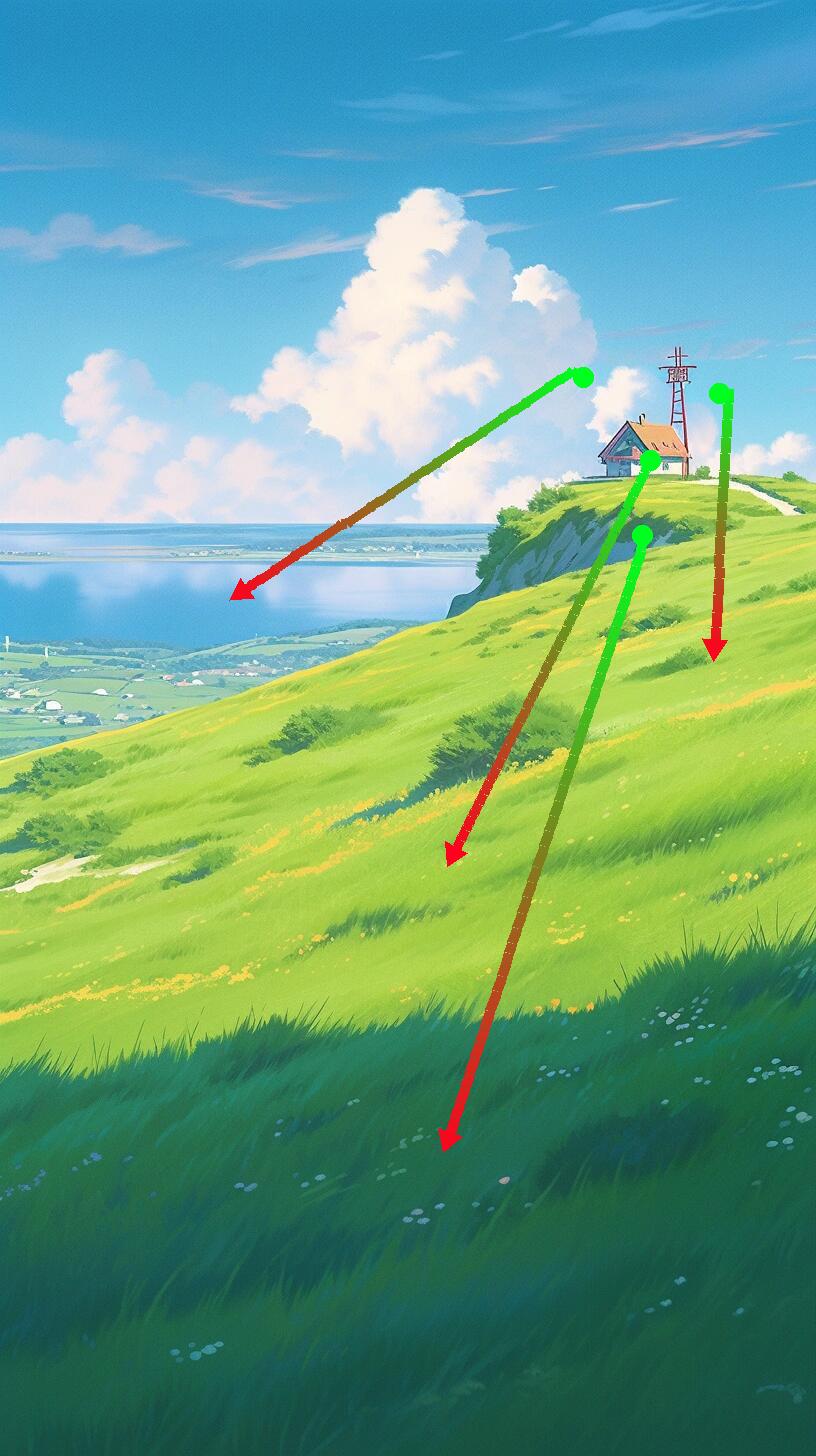

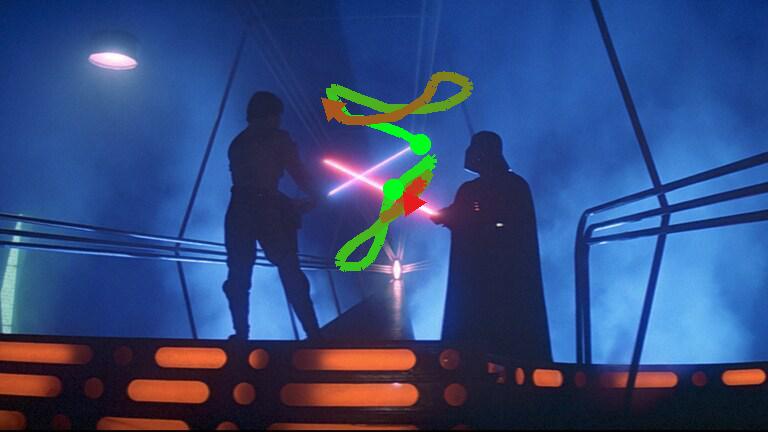

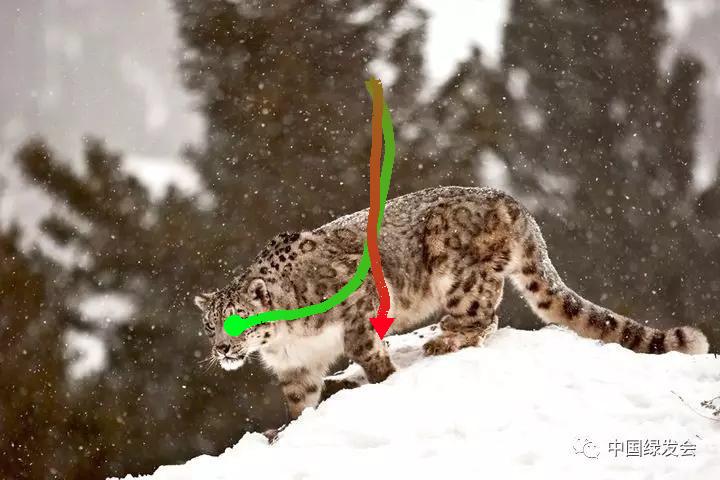

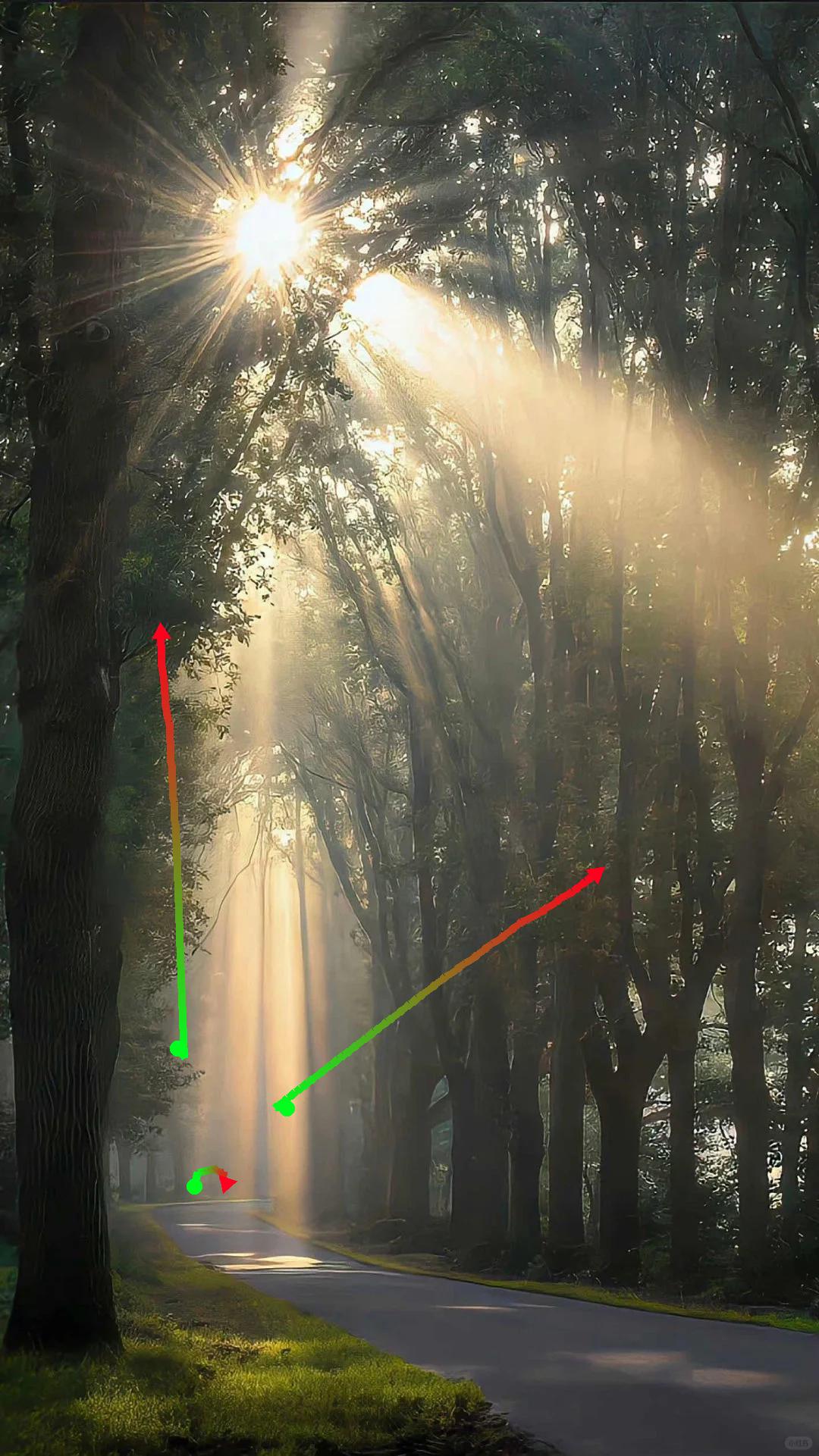

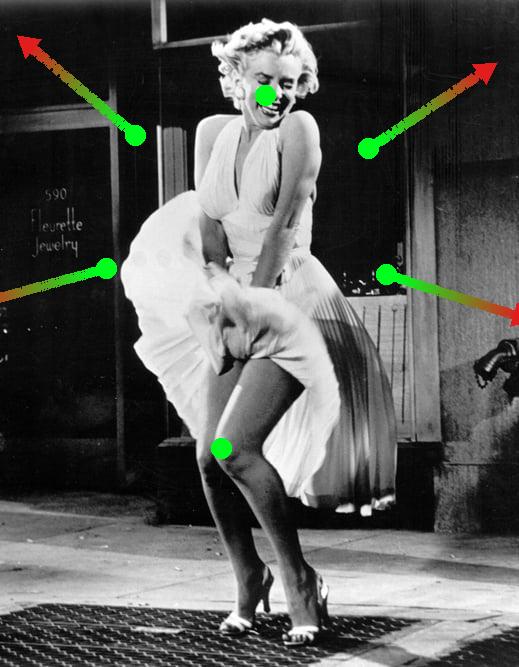

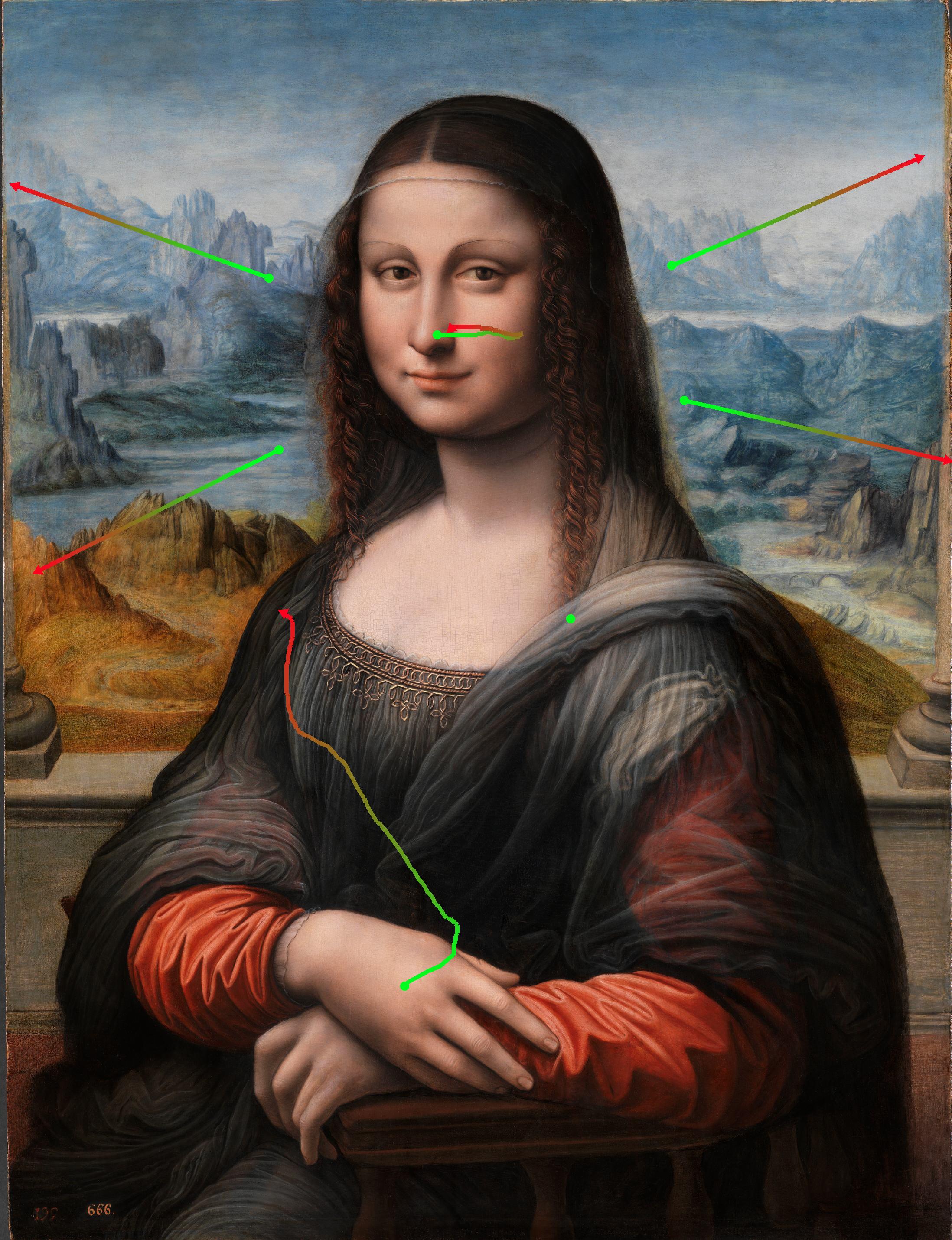

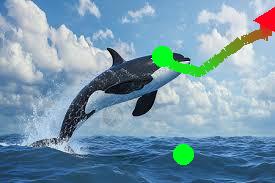

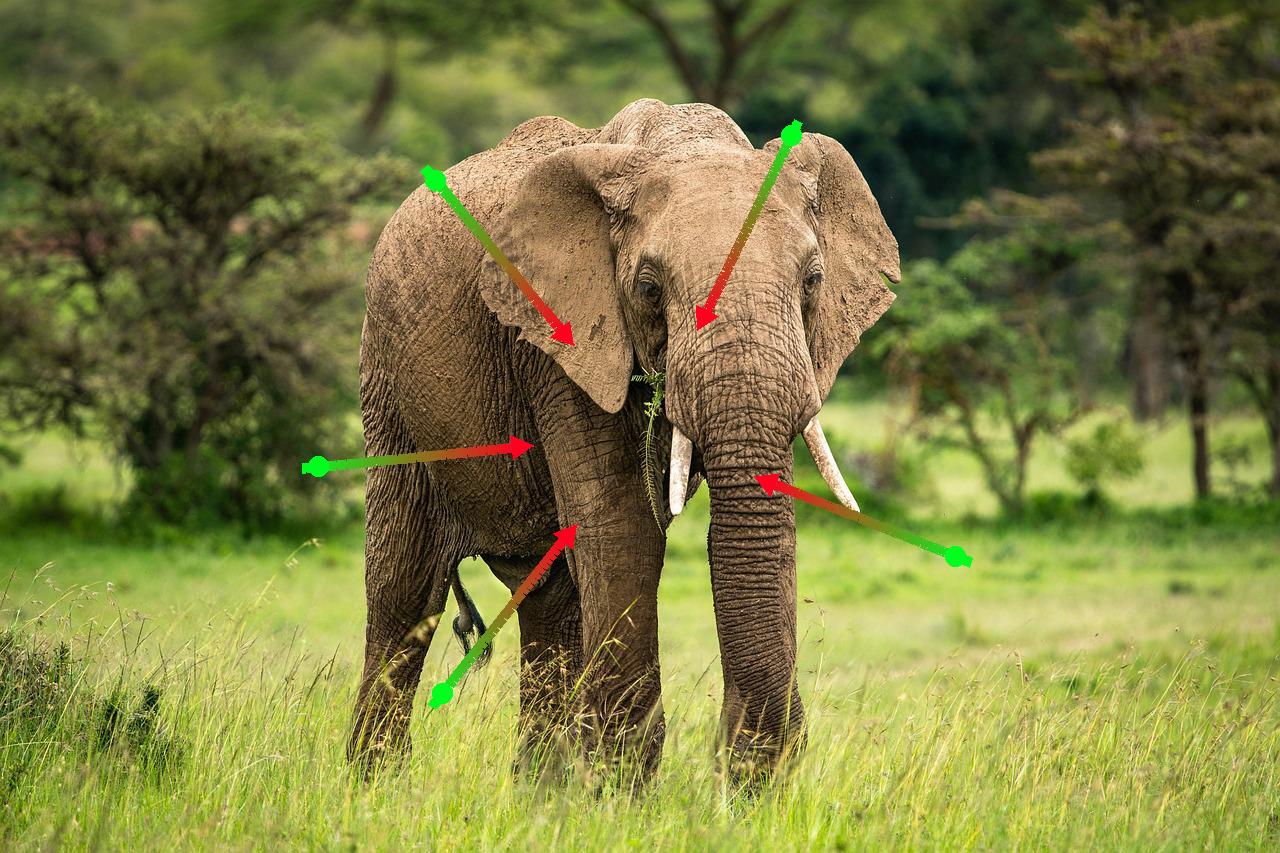

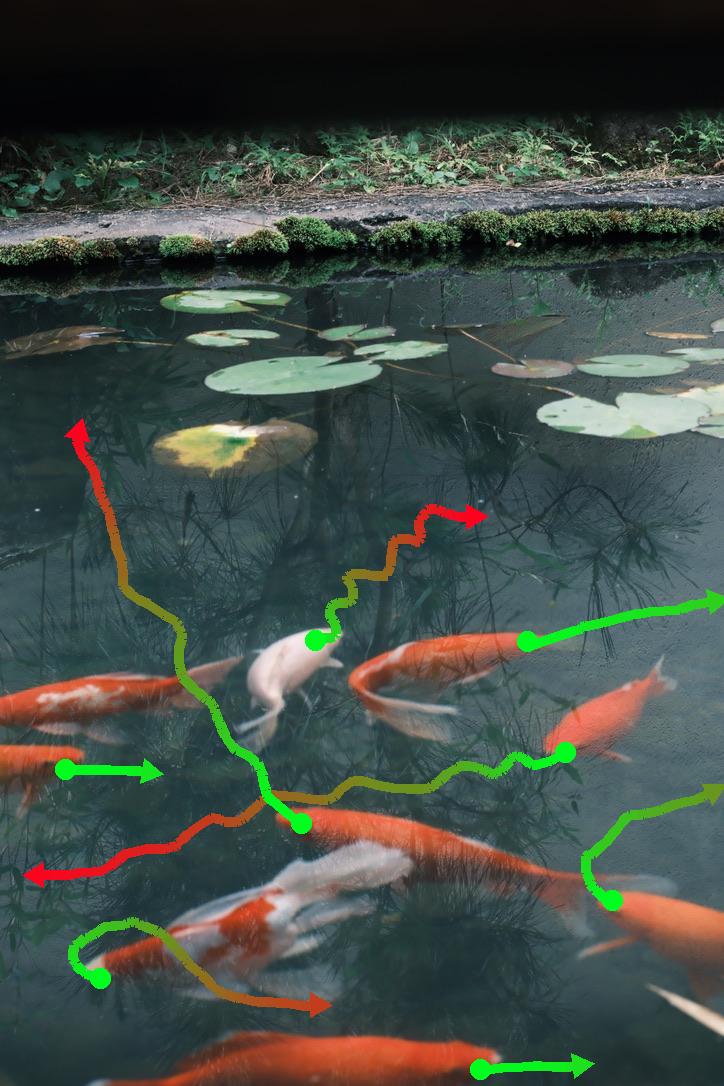

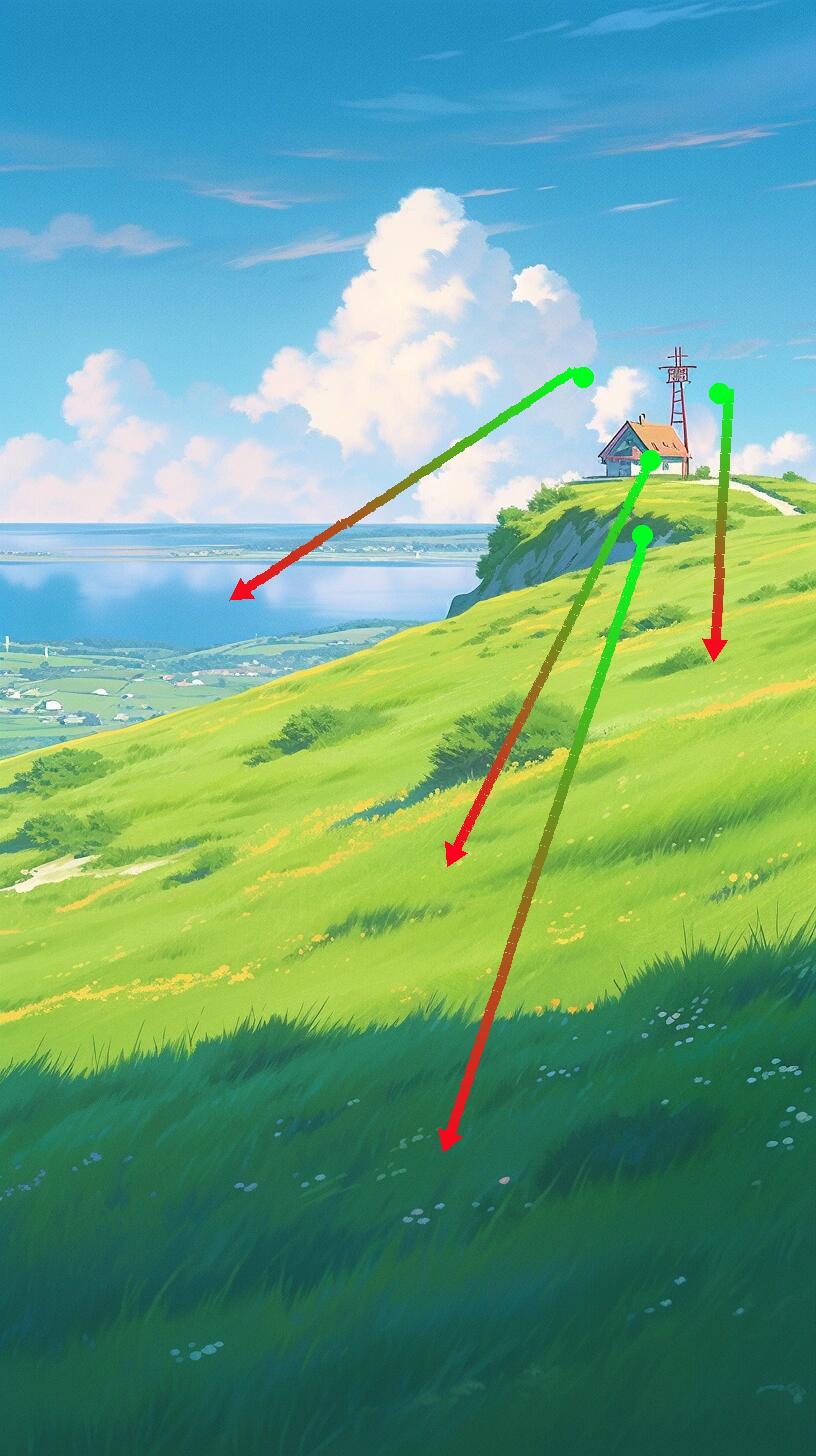

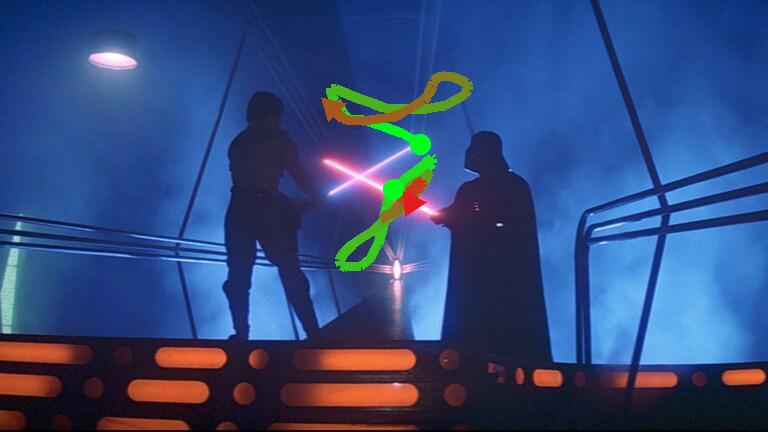

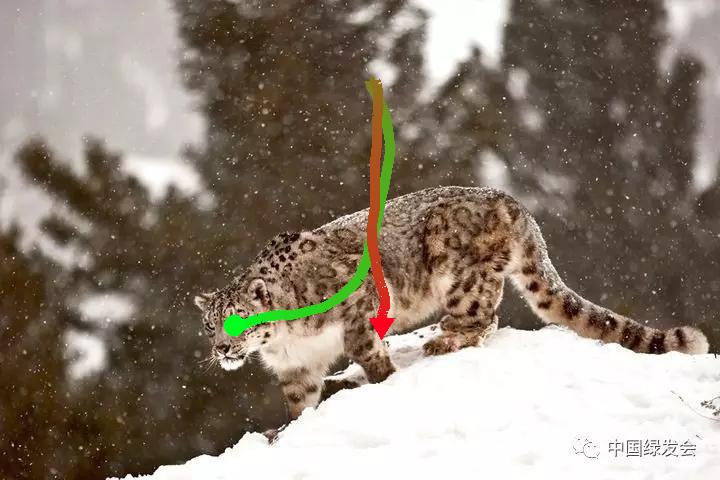

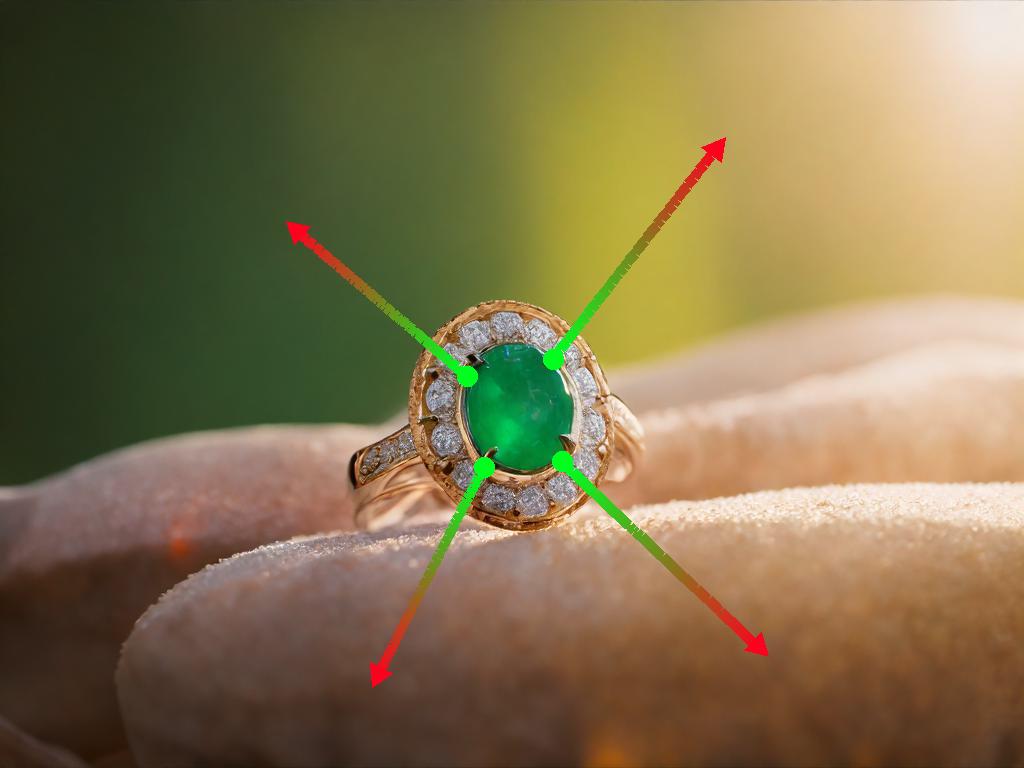

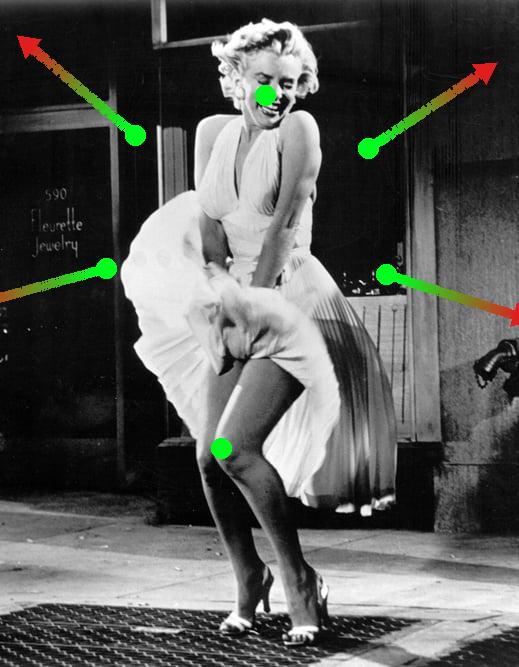

We provide an interactive editor tool for creating trajectories. The tool lets users draw free-form trajectories, create camera zoom-in or zoom-out trajectories, edit existing trajectories, and apply a global camera pan.
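As a rough illustration of what zoom and global-pan trajectories might look like as data, the sketch below generates point tracks in pixel coordinates. The function names, the linear interpolation over time, and the `(x, y)`-per-frame track format are hypothetical; they are not taken from the editor itself.

```python
def zoom_trajectory(point, center, end_scale, num_frames):
    """Move a point radially about `center` as the scale goes from 1 to end_scale.

    end_scale > 1 pushes points away from the center (zoom in);
    end_scale < 1 pulls them toward it (zoom out).
    """
    px, py = point
    cx, cy = center
    track = []
    for t in range(num_frames):
        a = t / (num_frames - 1) if num_frames > 1 else 0.0
        s = 1.0 + a * (end_scale - 1.0)
        track.append((cx + s * (px - cx), cy + s * (py - cy)))
    return track

def apply_pan(tracks, total_dx, total_dy):
    """Add the same linearly growing offset to every track (a global pan)."""
    panned = []
    for track in tracks:
        n = len(track)
        panned.append([
            (x + total_dx * (t / (n - 1) if n > 1 else 0.0),
             y + total_dy * (t / (n - 1) if n > 1 else 0.0))
            for t, (x, y) in enumerate(track)
        ])
    return panned
```

For example, `apply_pan([zoom_trajectory((10, 0), (0, 0), 2.0, 3)], 4, 0)` combines a zoom-in with a rightward pan for a single point.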

Left: process of user drawing trajectories. Right: generated video.

Note: all results are generated in a single pass, without any cherry-picking.


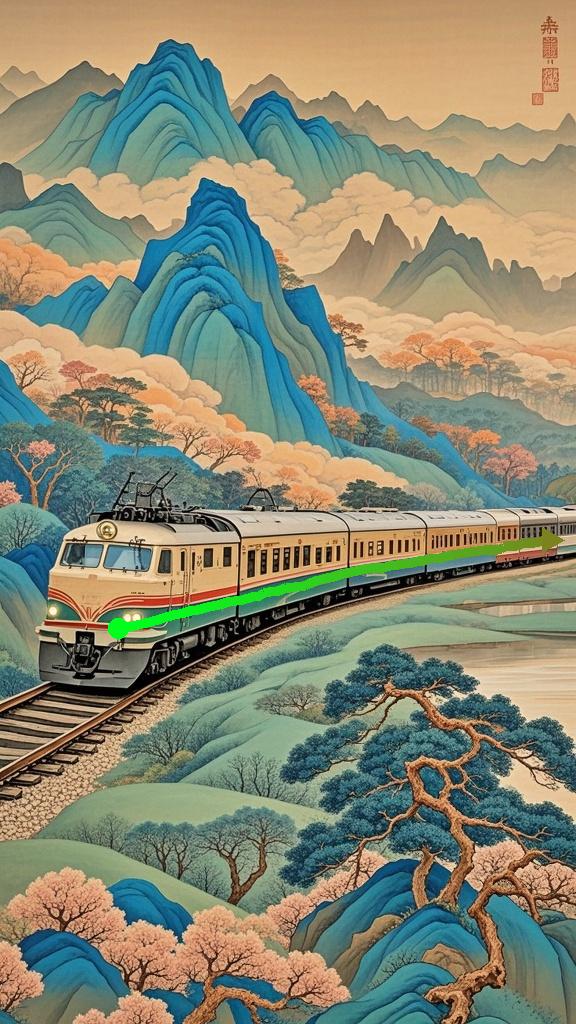

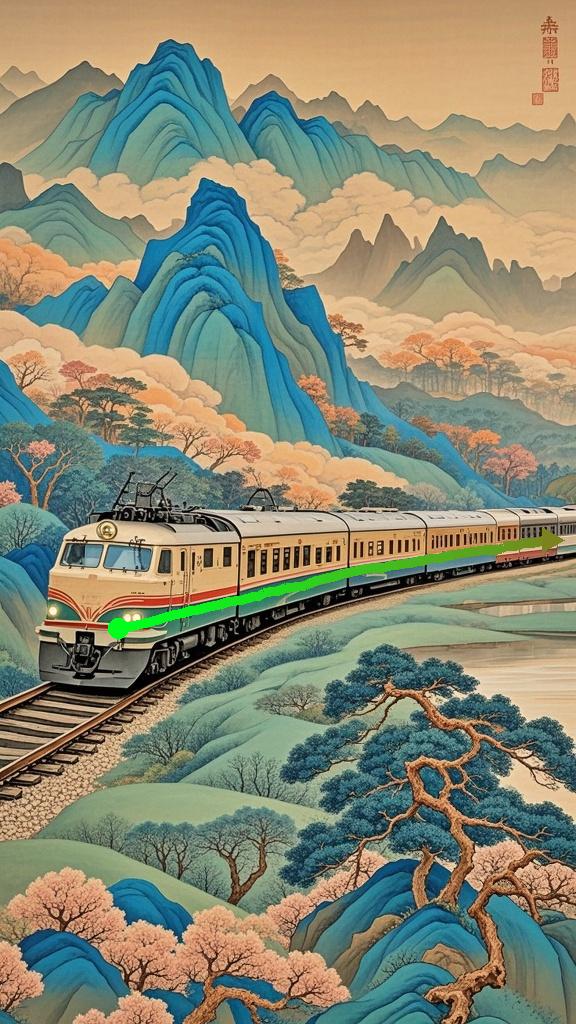

ATI can mimic a video by extracting its motion dynamics along with its first-frame image. Moreover, by leveraging powerful image-editing tools, it also enables "video-editing" capabilities.

| Reference Video (for Extracting Motion) | First Frame Image | Generated Video |
|---|---|---|

We sincerely thank Bo Liu, Yizhi Wang, Alex, Xueqin Deng, and Linjie Yang for their insightful discussions. We also greatly appreciate Liming Jiang's help in building the website.

If you find ATI useful for your research or applications, please cite our paper:

@article{wang2025ati,

title={{ATI}: Any Trajectory Instruction for Controllable Video Generation},

author={Wang, Angtian and Huang, Haibin and Fang, Zhiyuan and Yang, Yiding and Ma, Chongyang},

journal={arXiv preprint},

volume={arXiv:2505.22944},

year={2025}

}